IS YOUR COMPUTER A RACIST? WE KNOW AI’S ‘HOW’ BUT WE NEED ‘WHY’

Kablamo's Allan Waddell recently explored the bleeding edge of Artificial Intelligence for The Australian.

From the minute we wake up we’re generating data: the alarm clock on our smartphone, the first login to our email, the first news article we read. So much data is being collected that the only way to sort through it all and find the most valuable insights hidden within is to enlist the help of algorithms and deep-learning neural networks.

While artificial intelligence has been taken up with an enthusiasm bordering on the fanatical, it raises a slew of new, pressing, and even philosophical complications. For example, computers are notoriously bad at understanding human assumptions. Remember Microsoft’s AI chatbot Tay? Tay was created to answer questions on Twitter, learning from its users to speak the way a millennial would. Because it had no filters, Tay soon began spouting what can only be described as hate speech, including Holocaust denial and admiration for Adolf Hitler.

Algorithms aren’t entirely to blame here. Humans have been trying to codify ethics since before the ancient Greeks and, so far, have been largely unsuccessful. How can we codify what we ourselves can’t explain? For that matter, how can we be sure human biases aren’t being hard-coded into AI? Regulating AI decision-making demands expertise from many disciplines: not just ethics, but computer science, philosophy, cognitive science, and computational linguistics. Nonetheless, AI is being adopted so rapidly that many of these issues are simply left to sort themselves out, a potentially disastrous approach.

Here’s just one example. In the US, a piece of software called Correctional Offender Management Profiling for Alternative Sanctions (Compas) is used to assess the risk of defendants reoffending. The output of this algorithm is used to inform sentencing in many states. While the appeal of such software is clear, there’s just one problem — the program is racist.

A 2016 analysis by ProPublica found that black defendants who did not go on to reoffend were nearly twice as likely as their white counterparts to be misclassified as higher risk, while white defendants who did reoffend were misclassified as “low-risk” much more often than black defendants.

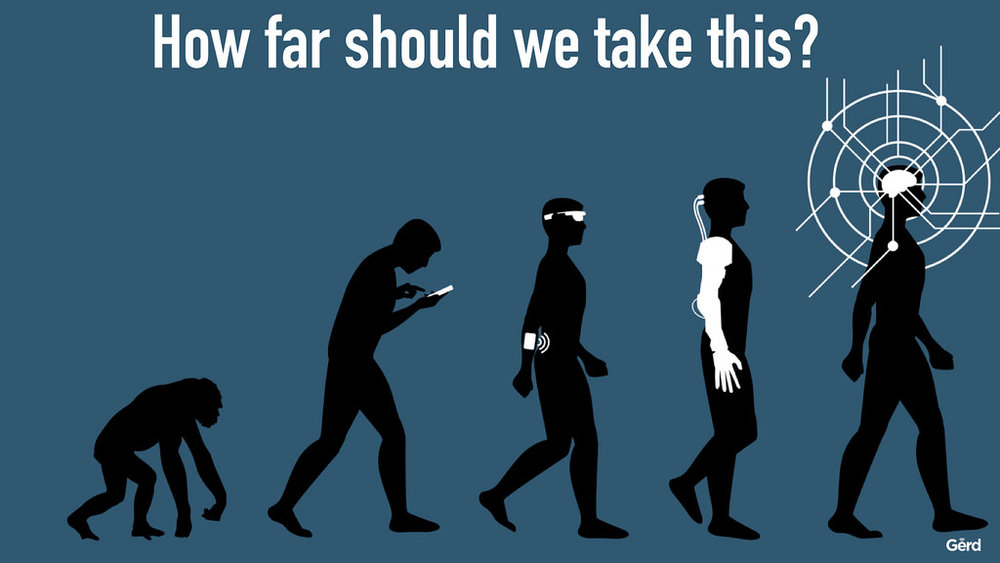
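
The two errors in that finding can be expressed as per-group rates: a false positive rate (people who did not reoffend but were labelled high risk) and a false negative rate (people who did reoffend but were labelled low risk). The Python sketch below shows one way such rates might be computed. It is a minimal illustration, not the analysis’s actual code; the function name `error_rates_by_group`, the tuple layout, and the group labels “A” and “B” are hypothetical, and the records are invented toy data rather than COMPAS results.

```python
from collections import defaultdict

def error_rates_by_group(records):
    """Per-group misclassification rates for a binary risk label (hypothetical helper).

    records: iterable of (group, predicted_high_risk, reoffended) tuples.
    Returns {group: (fpr, fnr)} where
      fpr = share of people who did NOT reoffend but were labelled high risk
      fnr = share of people who DID reoffend but were labelled low risk
    """
    counts = defaultdict(lambda: {"fp": 0, "neg": 0, "fn": 0, "pos": 0})
    for group, predicted_high, reoffended in records:
        c = counts[group]
        if reoffended:
            c["pos"] += 1
            if not predicted_high:
                c["fn"] += 1  # reoffender wrongly labelled low risk
        else:
            c["neg"] += 1
            if predicted_high:
                c["fp"] += 1  # non-reoffender wrongly labelled high risk
    # Return NaN when a group has no examples of an outcome, avoiding division by zero.
    return {
        g: (c["fp"] / c["neg"] if c["neg"] else float("nan"),
            c["fn"] / c["pos"] if c["pos"] else float("nan"))
        for g, c in counts.items()
    }

# Invented toy records for two hypothetical groups; not real data.
toy = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", False, False),
    ("A", True, True), ("A", True, True),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, False),
    ("B", False, True), ("B", True, True),
]

for group, (fpr, fnr) in error_rates_by_group(toy).items():
    print(f"group {group}: false positive rate {fpr:.2f}, false negative rate {fnr:.2f}")
```

In this made-up data, group A’s non-reoffenders are flagged high risk twice as often as group B’s (0.50 vs 0.25), while group B’s reoffenders are more often labelled low risk (0.50 vs 0.00). That is the same two-sided pattern described above, although the numbers here carry no meaning beyond illustration.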

As more decisions are handed over to AI, regulating its behaviour could become increasingly important. But how do you do that without severely impairing AI’s immense potential value? Part of the challenge is that while we can understand “how” an AI reached a certain conclusion, discovering the “why” is much more problematic.

Suppose we could develop an introspective neural network that an AI could use to justify its decisions. How would we know the justifications are true, and not just fabrications designed to earn the approval of the network’s trainer? How would we know whether the AI is telling us the truth or just saying what we want to hear?

Anupam Datta of Carnegie Mellon University has been stress-testing a neural network that scores potential employees on a variety of input parameters. These include standard data points such as age, sex, and education, but also parameters such as weightlifting ability. If a job requires lifting heavy objects, selecting for this parameter makes sense, but it could also skew job offers towards men. To test for this, some parameters were randomly swapped between male and female candidates. If the AI still favours men after the swap, that could indicate it is selecting for men specifically, regardless of their qualifications. In other words, the AI would be sexist for reasons beyond selecting for favourable employee parameters.
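
The swap test described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a reproduction of Datta’s actual methodology: the field names (`sex`, `lift_kg`, `education_years`), the helper `swap_test`, the two scoring functions and the four candidates are all hypothetical. `fair_score` uses only job-relevant features, while `biased_score` adds a bonus for being male.

```python
import random
from statistics import mean

# Hypothetical scoring models; field names and weights are illustrative assumptions.
def fair_score(c):
    # Uses only job-relevant features.
    return 2.0 * c["lift_kg"] / 100 + c["education_years"] / 10

def biased_score(c):
    # Same job-relevant features, plus a bonus for sex itself.
    return fair_score(c) + (1.0 if c["sex"] == "M" else 0.0)

def swap_test(candidates, score, features, seed=0):
    """Randomly pair male and female candidates, swap the given features within
    each pair, re-score everyone, and return mean(male) - mean(female).
    Candidates left unpaired (if the groups differ in size) keep their own features."""
    rng = random.Random(seed)
    men = [dict(c) for c in candidates if c["sex"] == "M"]    # copies, so the
    women = [dict(c) for c in candidates if c["sex"] == "F"]  # input is not mutated
    rng.shuffle(men)
    rng.shuffle(women)
    for m, w in zip(men, women):
        for f in features:
            m[f], w[f] = w[f], m[f]
    return mean(score(c) for c in men) - mean(score(c) for c in women)

# Four invented candidates; the men start out with the heavier lifts.
candidates = [
    {"sex": "M", "lift_kg": 80, "education_years": 12},
    {"sex": "M", "lift_kg": 60, "education_years": 16},
    {"sex": "F", "lift_kg": 50, "education_years": 16},
    {"sex": "F", "lift_kg": 40, "education_years": 14},
]
features = ["lift_kg", "education_years"]

# Negative: after the swap the women hold the men's stronger features.
print("fair model gap:", swap_test(candidates, fair_score, features))
# Positive: the sex bonus keeps men ahead even with the women's features.
print("biased model gap:", swap_test(candidates, biased_score, features))
```

After the swap, the fair model’s gap flips towards the women, who now carry the men’s heavier lifts, whereas the biased model still favours men. One limitation of this design is that it only flags a sex bonus large enough to outweigh the swapped features. A more direct check is to flip each candidate’s `sex` field while holding everything else fixed and compare the scores.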

Our way of life, from search engines to advertising, relies on algorithms sifting through dizzying amounts of data to spot trends. Their usefulness doesn’t put them above scrutiny, however. In fact, a tool as powerful as AI needs to be understood as well as possible. It’s important to answer these questions now, before the AI in job interviews, courtrooms or customs terminals starts drawing conclusions with no explanation.