AI AND THE INTELLIGENCE EXPLOSION - DIRECTOR'S CUT

A few weeks ago Allan published some thoughts on AI in The Australian. What was published came from a longer exploratory piece which makes sense to publish here in full. Here it is...

It’s a subject that’s been whispered about for decades. Since the dawn of computing, murmurs have circulated through technology circles with each new breakthrough. Occasionally, the whispering explodes for a couple of days before melting back into the back rooms of tech conferences, companies, and research firms. One of these explosions took the world by storm when Deep Blue bested chess legend Garry Kasparov in 1997, the first time a computer defeated a reigning world chess champion in a match. Interest spiked even higher in 2016, when AlphaGo beat Lee Sedol 4-1 in a five-game match of Go, in part by playing an original move that it estimated a human professional would almost never play. (Go, by the way, is a game with a possibility space so huge it makes chess look like checkers, at least from a search perspective.

Various numbers are tossed around for possible board configurations in both games, but chess is generally stated to have somewhere between 10⁵⁰ and 10⁷⁰, falling short of the number of atoms in the observable universe, 10⁷⁸, by at least eight orders of magnitude. Go, on the other hand, has roughly 10¹⁷⁰ legal board positions, and the number of possible games is at least 10^(10^48). That last number is so astronomically enormous that no physical quantity will even get you into the same galaxy as the ballpark.) And in 2017, AlphaGo was upgraded to AlphaGo Zero, a version that learned without ever playing against humans, used about a twelfth of the hardware (4 TPUs instead of 48), and beat the previous AlphaGo 100 games to 0.
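Working in exponents makes these comparisons concrete. The short worked example below uses only the figures quoted in the paragraph above: 10⁷⁰ as the upper estimate for chess, 10⁷⁸ atoms, and the Go figure of 10^(10^48).

```latex
\frac{10^{78}}{10^{70}} = 10^{8}
\qquad
10^{10^{48}} = 10^{78 \cdot \frac{10^{48}}{78}}
             = \left(10^{78}\right)^{10^{48}/78}
             \approx \left(10^{78}\right)^{1.28 \times 10^{46}}
```

In words: even the largest chess estimate falls a hundred-million-fold short of the atom count, while the Go figure equals the atom count of the universe raised to a power of roughly 10⁴⁶.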

While the inhuman power of artificial intelligence is fascinating and exciting to watch, its rapid growth is making some leading technologists anxious. Some call this phenomenon an intelligence explosion. Others call it the AI singularity. Whatever the name, they use these terms as a warning to all of us humans that our days at the top might be coming to an end. Just as AI isn’t new, though, worries about AI stretch way back into the ’50s, when the potential power of computers started to become clear. John von Neumann is credited with first using the term “singularity” for runaway technological progress in the 1950s, and the term “intelligence explosion” appears to have come from mathematician I. J. Good in 1965:

“Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an ‘intelligence explosion,’ and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make.” (I. J. Good, Advances in Computers, vol. 6, 1965)

And so modern scientists and techies, perhaps seeing how far we’ve come since the ’50s and ’60s, see this day of machine ultraintelligence as drawing near. Ray Kurzweil, a futurist and Director of Engineering at Google, says human-level intelligence might be just over 10 years away: he predicts that an AI will pass the Turing test by 2029, and he has marked 2045 as the date of the singularity. Computer scientist Jürgen Schmidhuber, whom some call the “father of artificial intelligence,” thinks that in 50 years there will be a computer as smart as all humans combined. And Elon Musk, tech leader extraordinaire of PayPal, SpaceX, Tesla, and, more recently, OpenAI, while less prone to prophesying specific dates, calls artificial intelligence a “fundamental existential risk for human civilization.”

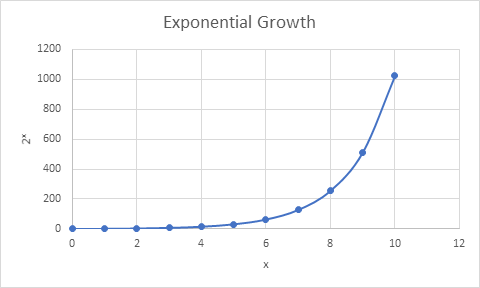

Where are these numbers coming from? And is this stuff even possible? On the one hand, the argument made by Musk and other singularity-worriers is pretty simple and centers on an exponential growth curve. Here’s a really simple one, a plot of 2ˣ:

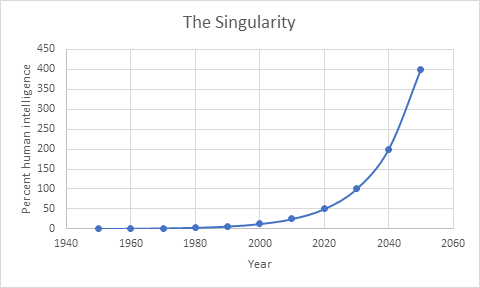

Except instead of plotting 2ˣ, let’s put years along the x-axis and computer intelligence on the y-axis:

On this chart, it takes a long time to reach 100% human intelligence, starting way back in 1940. However, once human intelligence is reached, it takes a very short time to reach 400%: just two more doublings. The argument goes essentially as laid out by I. J. Good: when computers improve themselves, they’ll get so smart so fast nobody will know what hit them. And this chart isn’t even steep enough, because it’s modeled on 2ˣ, which has a constant growth rate. In the case of ultraintelligent machines, each gain in intelligence would also speed up the next, so the growth rate itself would keep climbing, creating a vertical asymptote in the intelligence graph: intelligence would shoot toward infinity a short while after human level is reached.
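The difference between “fast” and “vertical” can be made precise with two toy growth laws. This is a minimal sketch under stated assumptions, not a model anyone has fitted to real data. Let intelligence I start at 1.0 (human level). Under dI/dt = kI, growth is exponential, like the 2ˣ chart. Under dI/dt = kI², every gain also speeds up the next. The second law has the closed-form solution I(t) = I₀ / (1 − kI₀t), which diverges at the finite time t* = 1/(kI₀). The constant k = 0.1 per year and the function names are hypothetical, chosen so that both curves start at the same rate.

```python
import math


def time_to_reach_exponential(multiple: float, k: float) -> float:
    """Time for dI/dt = k*I to grow by `multiple`: I0*exp(k*t) = multiple*I0."""
    return math.log(multiple) / k


def time_to_reach_hyperbolic(multiple: float, k: float, i0: float = 1.0) -> float:
    """Time for dI/dt = k*I**2 to grow by `multiple`.

    The solution is I(t) = i0 / (1 - k*i0*t), so I reaches multiple*i0
    when 1 - k*i0*t = 1/multiple.
    """
    return (1.0 - 1.0 / multiple) / (k * i0)


def blowup_time(k: float, i0: float = 1.0) -> float:
    """Finite time at which the hyperbolic solution diverges (the vertical asymptote)."""
    return 1.0 / (k * i0)


if __name__ == "__main__":
    k = 0.1  # assumed growth constant per year, purely illustrative
    for multiple in (4, 1_000, 1_000_000):
        print(f"x{multiple:>9,}: exponential {time_to_reach_exponential(multiple, k):6.1f} yr, "
              f"hyperbolic {time_to_reach_hyperbolic(multiple, k):5.2f} yr")
    print(f"hyperbolic curve goes vertical at t = {blowup_time(k):.1f} yr")
```

With the assumed k = 0.1, quadrupling takes about 13.9 years under exponential growth but only 7.5 under the hyperbolic law. The hyperbolic curve also reaches every multiple, however large, before t = 10 years. That is the “wall” on the singularity chart.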

People who think like Musk look at our progress on one of these charts and think we’re just about spot-on. Here, approaching 2020, it’s not that crazy to call computers half as smart as people. They can perform pattern and sound recognition tasks, play games, and carry out billions of arithmetic operations per second. Looking back towards the ’40s, when computers were very specialized and quite slow, it’s fair to call them glorified calculators with no intelligence whatsoever. And given the explosive rate of technological development in the last century compared to the centuries before, it does seem like we’re going nowhere but up and to the right.

So, argues Musk, how is the future looking for us humans once computers are infinitely intelligent? What lies on the other side of that vertical intelligence wall on the chart, and how could we possibly plan to survive once we get there?

Before this gets you too twisted up, I’ve got some good news: while all this singularity stuff is very compelling, it is still pure speculation. In fact, many people who work in the artificial intelligence field just don’t see this kind of intelligence explosion happening in the next 30-some years, if at all. As computer scientist Andrew Ng of Baidu put it, “There could be a race of killer robots in the far future, but I don’t work on not turning AI evil today for the same reason I don’t worry about the problem of overpopulation on the planet Mars.” Or, as Toby Walsh, AI professor at UNSW, put it, “stop worrying about World War III and start worrying about what Tesla’s autonomous cars will do to the livelihood of taxi drivers.” In essence, Ng and Walsh argue that humanity faces far more pressing concerns, both from AI itself, like potential 33% unemployment due to automation, and from other sources, such as climate change or nuclear war. In their view, worrying about a computer becoming ultraintelligent drains resources from these problems. It’s also worth noting that, in the same article, Toby Walsh claims “most people working in AI… have a healthy skepticism for the idea of the singularity. We know how hard it is to get even a little intelligence into a machine, let alone enough to achieve recursive self-improvement.”

Ng and Walsh share the same sentiment: perhaps superintelligent AI is possible from an engineering standpoint, but it is so far away that there are many more pertinent, critical issues to worry about. There are also some fundamental engineering concerns that need to be taken into account.

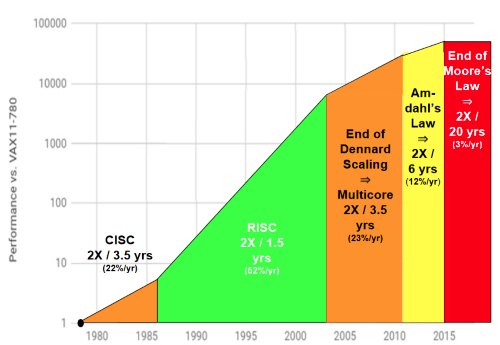

For one, it looks like Moore’s Law, which states that chips will host twice as many transistors for the same cost every 12-24 months, has finally died. While there’s been some residual debate, and while companies are still shrinking components, the rate has not been able to keep up. See this graph drawn up by Wall Street Journal technical writer Daniel Matte:

Look familiar? This is a logistic curve; it is also another possible model which could explain our apparent “intelligence explosion.”
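Why a logistic curve is so easy to mistake for an exponential one can be shown numerically. The minimal sketch below assumes the standard logistic form, the solution of dx/dt = rx(1 − x/K), with an arbitrary ceiling K = 1000, starting value x₀ = 1 and rate r = 0.5. These are illustrative values, not fitted to Matte’s data or to any AI benchmark. The sketch compares that curve with a pure exponential starting from the same point at the same rate.

```python
import math


def exponential(t: float, x0: float, r: float) -> float:
    """Pure exponential growth from x0 at rate r."""
    return x0 * math.exp(r * t)


def logistic(t: float, x0: float, r: float, ceiling: float) -> float:
    """Solution of dx/dt = r*x*(1 - x/ceiling) with x(0) = x0."""
    return ceiling / (1.0 + (ceiling - x0) / x0 * math.exp(-r * t))


if __name__ == "__main__":
    # Illustrative parameters only; not fitted to any real transistor or AI data.
    x0, r, ceiling = 1.0, 0.5, 1000.0
    for t in range(0, 25, 4):
        e = exponential(t, x0, r)
        s = logistic(t, x0, r, ceiling)
        print(f"t={t:2d}  exponential={e:10.1f}  logistic={s:7.1f}  ratio={s / e:.3f}")
```

Up to around t = 8, the two columns stay within about 5% of each other. An observer with data only from that window could not tell which curve they were on. After that, the logistic curve flattens toward its ceiling while the exponential keeps climbing.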

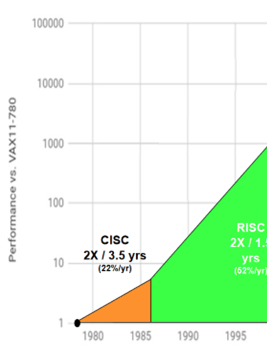

In 1995, when fervor for Moore’s Law was in full swing, a person looking at current and past trends would see:

This looks a whole lot like what artificial intelligence futurists look at now. However, due to some pernicious engineering limits on producing transistors below the 10-nanometer range, it’s become much harder for companies to fit more transistors on chips, and the progress curve has started to level off. This levelling-off also implies an asymptote, but a horizontal one, which is a much more common and manageable kind of asymptote in science. Think of the speed of light, which we could theoretically approach but never reach without doing away with a little thing called mass. Whether or not the limit reached is fundamental, it demonstrates that much more work will be required to keep making improvements, a point we will return to later. It could be that in the next 10 years, instead of ushering in human-level AI, we’ll start to hit a computer-intelligence ceiling, forming another neat logistic curve. Furthermore, speculations about the near-infinite power of AI often rely on the idea that our processing power will continue to increase rapidly, which, as the death of Moore’s Law shows, might be an unfair assumption.

Another assumption worth examining is that greater size and processing power in neural networks lead to greater intelligence at all. It turns out that this assumption isn’t necessarily true. As Bernard Molyneux, a cognitive science professor at UC Davis, put it, “if a neural network is too large for a data set, it learns the data by rote instead of making the generalizations which allow it to make predictions.” So the real trick in making an intelligent AI is striking the right relationship between computational power and data. Striking a balance, however, implies that overshooting and undershooting both hurt performance, so such research would focus on finding a nearby intelligence maximum for a machine. Whether that maximum is the true (global) maximum or only a local one is a whole different can of worms and an ongoing problem in mathematics. The point is that if some golden ratio leads to an intelligence explosion, there’s absolutely no guarantee we could ever find it analytically, and the sheer number of possible ratios would count against stumbling on it by luck. Obviously, those in AI can still make improvements by studying this delicate balance and optimizing. What’s important to note is that AI intelligence requires progress in areas that have historically been confounding, like the link between the brain and intelligence. Progress in areas with a history of steady growth, like processing power and neural network size, does not guarantee ultraintelligence at all.
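Molyneux’s point about rote learning can be seen in a toy model that swaps the neural network for polynomials. This substitution is for illustration only and is not how the quoted work was done. A polynomial with as many coefficients as training points can pass through every point exactly, noise included, while a two-parameter straight line cannot. The sketch assumes a made-up linear “true” pattern, ten training points, and deterministic alternating ±0.3 “noise” so the result is reproducible. `true_function`, `lagrange_predict`, `fit_line` and `memorizer` are hypothetical helper names.

```python
# Toy illustration: a model with as many parameters as data points
# "learns the data by rote"; a smaller model is forced to generalize.

def true_function(x: float) -> float:
    # Hypothetical underlying pattern the models are supposed to discover.
    return 2.0 * x + 1.0


def lagrange_predict(xs, ys, x: float) -> float:
    """Evaluate the degree-(n-1) polynomial passing through every training point."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                basis *= (x - xj) / (xi - xj)
        total += yi * basis
    return total


def fit_line(xs, ys):
    """Ordinary least-squares straight line (two parameters); returns a predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x

    def predict(x: float) -> float:
        return slope * x + intercept

    return predict


def mse(predict, xs, ys) -> float:
    """Mean squared error of a predictor on the given points."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


N = 10
train_x = [i / (N - 1) for i in range(N)]
# Deterministic alternating "noise" (+0.3, -0.3, ...) keeps the demo reproducible.
train_y = [true_function(x) + 0.3 * (-1) ** i for i, x in enumerate(train_x)]
# Unseen inputs: midpoints between training inputs, scored against the noise-free pattern.
test_x = [(a + b) / 2 for a, b in zip(train_x, train_x[1:])]
test_y = [true_function(x) for x in test_x]


def memorizer(x: float) -> float:
    # Ten coefficients for ten points: fits every training point exactly, noise included.
    return lagrange_predict(train_x, train_y, x)


line = fit_line(train_x, train_y)

for name, model in (("memorizer (10 params)", memorizer), ("line (2 params)", line)):
    print(f"{name:22s} train MSE {mse(model, train_x, train_y):8.4f}"
          f"   test MSE {mse(model, test_x, test_y):8.4f}")
```

The memorizer scores a perfect zero on the points it has seen and swings wildly on the midpoints between them, worst near the ends of the range. The line gives up a little accuracy on the training set and generalizes far better.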

There’s even historical evidence supporting two ideas: that greater size and power don’t lead to greater intelligence, and that striking the right balance between network and data leads to great, but possibly capped, intelligence. This evidence comes from the only supercomputer older than 50 years: the human brain. Over the last 7 million years or so, the human brain has tripled in size, and at least half that growth occurred over the last two million years. This is a massive growth spurt in evolutionary terms, since changes like this usually take tens or hundreds of millions of years. However, since the development of cities about 10,000 years ago, the human brain has actually shrunk. And it is in those last 10,000 years that most of human development has occurred: agriculture, technology, sophisticated art, constructions of World Wonders, going to the moon, you name it. Also, take Neanderthals: evidence shows that Neanderthals had, on average, larger brains than Homo sapiens, and they didn’t make the evolutionary cut. It’s possible that because of our smaller brains, we humans were better able to generalize from data and produce better survival strategies. So it could be that during our rapid brain growth and subsequent shrinkage, our brains were zeroing in on the most effective size for intelligence, as determined by evolution. Evolution, after all, works much like a neural network training algorithm, making small tweaks and keeping the tweaks that improve the chances of survival and reproduction. So, perhaps evolution has already run this game of maximizing intelligence, and the result is us.
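The “small tweaks, keep what works” loop can be sketched as mutate-and-select hill climbing, a bare-bones relative of the (1+1) evolution strategy. This is an illustration only. Real evolution works on whole populations, and gradient-based neural network training computes the direction of its tweaks instead of guessing. The fitness landscape below is invented, with a lower peak near x = 1 and a taller one near x = 4. `fitness` and `hill_climb` are hypothetical names.

```python
import math
import random


def fitness(x: float) -> float:
    """Hypothetical landscape: a modest peak near x = 1 and a taller one near x = 4."""
    return math.exp(-(x - 1.0) ** 2) + 2.0 * math.exp(-(x - 4.0) ** 2)


def hill_climb(start: float, steps: int = 2000, max_tweak: float = 0.1, seed: int = 0) -> float:
    """Repeatedly make a small random tweak and keep it only if fitness improves."""
    rng = random.Random(seed)  # seeded for reproducibility
    x = start
    for _ in range(steps):
        candidate = x + rng.uniform(-max_tweak, max_tweak)
        if fitness(candidate) > fitness(x):
            x = candidate
    return x


if __name__ == "__main__":
    for start in (0.0, 5.0):
        end = hill_climb(start)
        print(f"start {start:.1f} -> x = {end:.3f}, fitness = {fitness(end):.3f}")
```

Because tweaks are capped at 0.1 and only improvements are kept, a climber that starts at 0 settles on the lower peak. It can never cross the valley to the higher one, which is the local-versus-true-maximum problem mentioned earlier, in miniature. A climber that happens to start at 5 finds the taller peak.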

Finally, exponential arguments assume that each new level of intelligence requires the same amount of effort as the last. So if it takes a person or computer x amount of time, processing, development, or neural connections to become half as smart as a person, then after another x of improvement, human-level intelligence is reached. However, our current understanding of intelligence is limited, and it’s unclear that advancing through levels of intelligence is linear in this way. It could be that it takes x effort to become half-intelligent, and four times that much effort to reach full human intelligence. This harks back to the previous discussion of Moore’s Law: just as we will continue to make improvements to microprocessors, we will continue to make improvements to AI. But eventually the easy improvements run out, and further improvements get really hard. If a computer wants to become ultraintelligent through runaway recursive improvement, the recursive improvement could become difficult more quickly than the computer becomes more intelligent. This provides another exponential curve to counteract the AI advancement curve, which would exert downward pressure on growth and imply logistic-shaped intelligence growth.
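The “it gets harder” argument can be put into a toy recurrence. This minimal sketch assumes, purely for illustration, that each round of self-improvement yields a gain proportional to current intelligence I, divided by a difficulty that multiplies by a fixed factor for every extra unit of intelligence: gain = rate · I / base^I. With base = 1, every level costs the same and growth is exponential. With base = 2, the per-round gains rise at first and then shrink every round. `simulate`, `gains`, `rate` and `difficulty_base` are hypothetical names, and the parameter values are arbitrary.

```python
def simulate(rounds: int, rate: float, difficulty_base: float, start: float = 1.0) -> list:
    """Toy recursive self-improvement (hypothetical model, for illustration only).

    Each round, a system at intelligence level I gains rate * I / difficulty_base ** I:
    smarter systems improve themselves faster (the factor I), but every extra unit
    of intelligence multiplies the effort needed by difficulty_base.
    difficulty_base == 1.0 means every level is equally hard, i.e. plain exponential growth.
    """
    levels = [start]
    for _ in range(rounds):
        i = levels[-1]
        levels.append(i + rate * i / difficulty_base ** i)
    return levels


def gains(levels: list) -> list:
    """Per-round improvement."""
    return [b - a for a, b in zip(levels, levels[1:])]


if __name__ == "__main__":
    flat = simulate(20, rate=0.5, difficulty_base=1.0)
    hard = simulate(20, rate=0.5, difficulty_base=2.0)
    for n in range(0, 21, 5):
        print(f"round {n:2d}: equal difficulty {flat[n]:9.1f}   rising difficulty {hard[n]:5.2f}")
```

With base 2, the gains in this toy peak within the first few rounds and shrink every round after that, so the curve bends over. It does not hit a hard ceiling, though; it keeps creeping upward ever more slowly. A true logistic ceiling would need the difficulty to become effectively infinite at some level.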

So, is an artificial intelligence explosion impossible? We don’t know. If you side with Kurzweil and Musk, the singularity is downright inevitable: technology moves forward, after all, and intelligence will advance with it. As Walsh points out, though, those in the trenches of AI are skeptical. In fact, Walsh worries more about unintelligent AIs, which can lead to fatal autonomous car accidents like the one in Florida this year. It could be that improving AI is critical to keeping ourselves safe as these systems become more ubiquitous, and that the real danger comes from stupid machines.

At the end of the day, it’s unclear whether the singularity is coming. That being said, it will be very interesting to see which side comes out on top. From one angle, betting against a catastrophic end to the world has always been a surefire way to bet correctly. On the other side, though, is Musk, and not many would bet against what his engineering teams can achieve. We’ll just have to wait and see.